This piece is the second in our Hottest Jobs in Tech series — a set of op-eds unpacking the most in-demand AI roles. We’re taking a journalistic lens to what these jobs really mean, what companies expect, and how they’re shaping the future of software.

AI researchers now sit at the center of the modern technology economy. Their ideas determine which models scale, which architectures dominate, and which companies will release the next viral platforms.

A decade ago, most worked quietly in universities or industrial labs, publishing papers that only a narrow academic audience read. Today, those same papers guide billion-dollar product decisions.

This article examines one of the most powerful — and least understood — roles in technology today: the AI researcher.

It explores how a handful of individuals have come to shape the direction of the industry, why their influence now extends far beyond academic circles, and what the rest of the market must do to keep pace.

The rise of the AI researcher

The transformation of AI research into industry currency has been astonishing. What was once a quiet academic pursuit now drives the strategies of trillion-dollar companies.

Top researchers at OpenAI, Anthropic, and Meta now command multi-million-dollar packages, sometimes with founder-level equity. These figures reflect both prestige and leverage. A small cohort of scientists now generates the intellectual capital entire markets depend on. The scarcity of that expertise has created a gravitational pull around a few frontier institutions, concentrating both innovation and influence.

For every company outside those circles, the challenge looks different. The constraint is no longer compute or data. It’s the ability to translate research into production.

Most breakthroughs begin as research ideas shared in papers or internal experiments. Their value depends on who can understand them, replicate them, and operationalize them. The race is no longer just about who can publish the next breakthrough. It’s about who can productize it first.

The companies that shorten the distance from research to reality will set the pace for everyone else.

This dynamic reshapes the entire market. The Transformer began as a paper. So did diffusion models. Most ideas never make it beyond the conference stage, but the few that do reset expectations for the entire field.

So, the bottleneck lies not in invention, but in application: how quickly companies can integrate these advances into real products (governed by constraints like latency, cost, compliance, and user experience).

At the frontier, a few hundred researchers push the limits of model design and optimization. Downstream, millions of engineers and data scientists race to make those discoveries usable. The gap between them has become the defining challenge of this new AI economy.

Let me be clear, though:

What distinguishes leaders from laggards is not who employs the most celebrated researcher, but who cultivates research fluency — the ability to absorb, adapt, and apply the ideas emerging from the frontier. It’s a skill that turns theory into execution: reading new papers, reproducing results, and shaping them into reliable, scalable systems.

Ninety-nine percent of companies will never hire an OpenAI-level researcher, and that’s perfectly fine. The much more achievable opportunity lies in building teams that can stand on their shoulders: engineers who can translate invention into impact.

That is where the next generation of competitive advantage will come from.

Who are AI researchers?

AI researchers occupy a very specific place in the technology ecosystem. They operate at the edge of what is currently possible. They design architectures, optimize training methods, and uncover principles that later become the backbone of applied systems. Their work shapes the raw material of progress: the mathematical and algorithmic breakthroughs that others build upon.

A couple of weeks ago, we published the first article in this series on AI Engineers, exploring the role of those who turn research into production. If AI engineers translate, AI researchers originate.

AI engineers take new ideas and make them work under real-world constraints; AI researchers create the ideas that make those products possible. Researchers define what is feasible; engineers determine whether it scales.

Modern AI depends on that relationship, and when it functions well, it shortens the distance between theory and deployment.

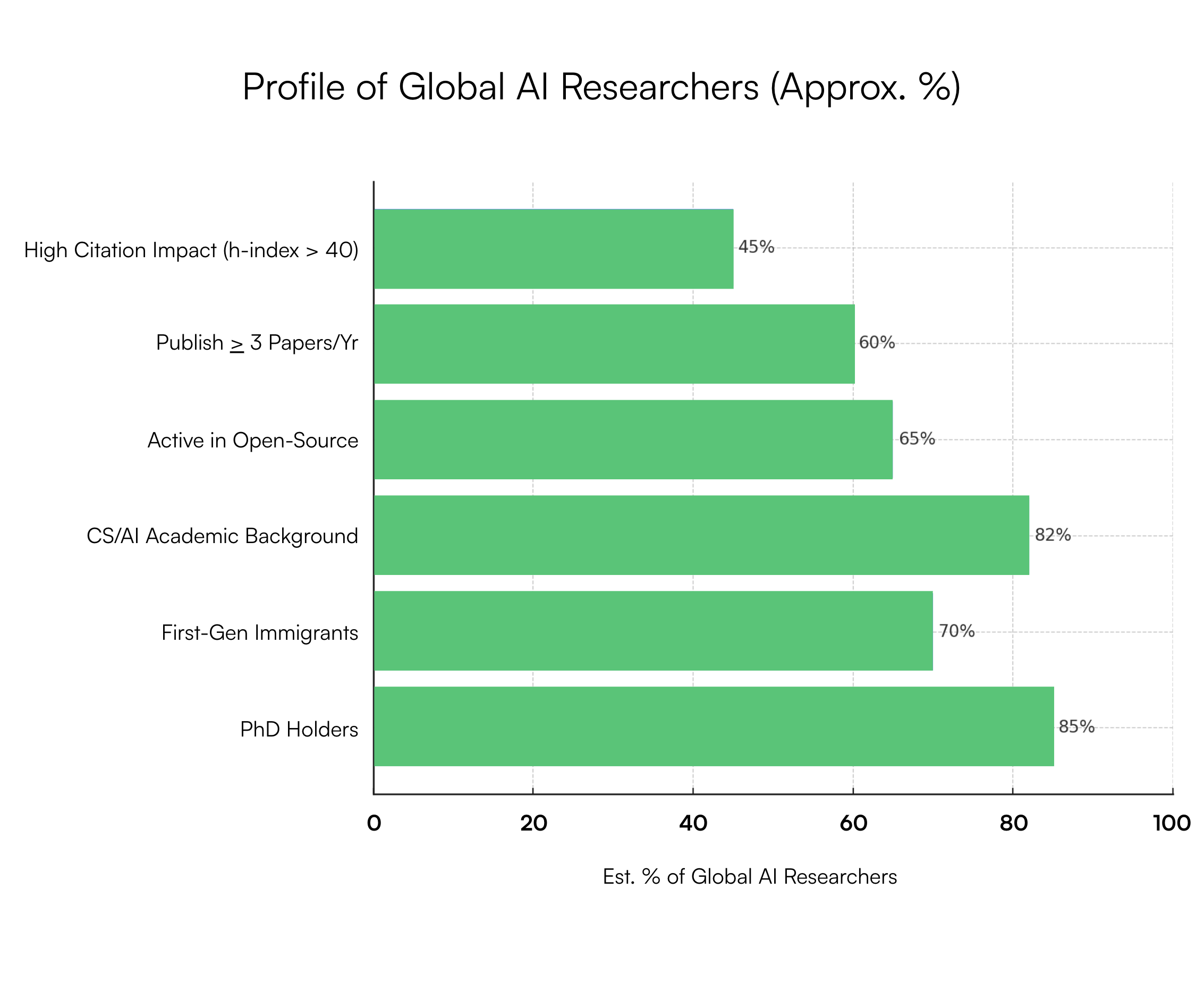

Most researchers come from deeply academic backgrounds. Doctorates in computer science, mathematics, physics, or statistics are common, and publication records at conferences like NeurIPS, ICML, and ICLR are almost expected. Many maintain open-source repositories that quietly become industry standards.

Global AI researchers share common traits like academic background, open-source activity, and high publication output, underscoring how concentrated technical expertise has become.

Increasingly, they are clustered inside a small number of institutions — frontier labs, Big Tech research groups, and chipmakers — where the concentration of expertise, resources, and capital has driven compensation to extraordinary levels.

Yet their influence extends far beyond those labs. The research itself only becomes valuable when others can interpret and apply it. That is where most organizations have agency. Competing with Meta or OpenAI for talent is rarely feasible, but developing research capability internally is.

That capability means finding engineers who can read new papers critically, reproduce results, adapt them to production constraints, and maintain those systems over time. It means hiring and upskilling for research literacy — the ability to understand how new methods work, where they break, and how they might be integrated into real workflows.

Companies pulling ahead in the AI arms race are the ones translating what labs produce into usable systems, closing the gap between research and revenue.

The current boom in context

The market for AI researchers today looks less like a traditional hiring pipeline and more like a geopolitical contest. Scarcity is extreme, competition is global, and the sums involved have surpassed even the wildest expectations from a few years ago.

Meta’s journey: the breaking point

Meta’s Superintelligence Lab became the most vivid example of how far this competition could stretch. By mid-2025, insiders estimated that a large share of its researchers held advanced degrees and international backgrounds, with many operating at senior or principal levels where compensation ran into the millions.

“Here’s everyone Meta hired for their Superintelligence team. Say hello to the new residents of Atherton with their $10M+/yr comp packages, everyone!” (Deedy, @deedydas, June 30, 2025)

The hiring spree drew headlines not only for its speed but for its scale. Reports of eight-figure compensation packages circulated widely, signaling how aggressively Meta was willing to spend to regain technical prestige. Many of the recruits had been on academic tracks just a few years earlier and were suddenly weighing life-changing offers in Menlo Park and London.

When traditional acquisitions fell through, Meta turned its attention to individuals. Recruiters scoured conference proceedings and citation databases, targeting anyone with a NeurIPS paper or foundation-model pedigree.

The strategy was simple: if you couldn’t buy the company, buy the people.

For a short time, it worked. The Superintelligence Lab quickly became one of the most talked-about groups in Silicon Valley. Recruiters were told to move with startup-level urgency, offering equity and bonuses that seemed impossible to turn down. Meta successfully poached talent from Apple, DeepMind, and OpenAI.

The optics were spectacular, but so were the costs.

Inside the company, the atmosphere shifted. Burn rates soared. Compensation leaks sparked resentment among long-tenured engineers, who watched new arrivals command multiples of their pay. Investors began asking whether Meta was buying genuine breakthroughs or simply buying headlines. Even internal advocates described the lab as “a company within a company.”

By late summer, the pressure reached its limit. Reality Labs (Meta’s VR/AR division) continued to lose billions, advertising growth slowed, and the AI hiring binge had become impossible to justify. In August, Meta quietly froze new research hiring. Analysts framed it as the natural end of an unsustainable strategy. Even trillion-dollar giants cannot outspend their way to innovation without straining morale, coherence, and investor patience.

The episode revealed a fundamental truth about this market: the arms race has no finish line. Prestige-driven bidding wars can capture headlines, but not necessarily durable advantage. For everyone else, the smarter path lies further downstream — building the systems and teams that can absorb the research, rather than trying to own it outright.

The same few names dominate recruiting chatter today: OpenAI, DeepMind, Anthropic, xAI, Scale AI, and Meta. Chipmakers such as NVIDIA, AMD, and Intel have joined the fray as well, recognizing that if you control the silicon, you want the scientists designing workloads that will define demand.

What stands out is not only the competition but the velocity. Researchers who once spent a decade climbing academic ladders now move from PhD to staff-level titles in a single hiring cycle.

As talent consolidates around a few labs, their gravitational pull intensifies. They attract not only researchers but engineers, product leaders, and investors eager to be close to the frontier.

What do AI researchers actually do?

Strip away the headlines and compensation chatter (which we’ll dive into below), and the role of an AI researcher is basically this: they work at the limits of what is technically and theoretically possible. Their output forms the scaffolding for the next generation of products and models.

Broadly, their work falls into three categories:

- New architectures and algorithms → Designing architectures and methods such as transformers, diffusion models, or retrieval-augmented generation.

- Benchmarks and leaderboards → Improving state-of-the-art results on datasets such as MMLU, ImageNet, or WMT.

- Open-source contributions → Releasing code and training pipelines that quickly become reference points for the field.

A typical day for a researcher involves running large-scale experiments, tweaking architectures, and debugging optimization failures in CUDA or PyTorch. Many spend weeks designing ablation studies, writing papers, or coordinating with collaborators across academia and industry on subjects like multimodal alignment, interpretability, or model safety.

The irony is that most days yield little visible progress. The work is dominated by failed hypotheses, dead ends, and incremental refinements. One researcher described it as “proving ten different ways something doesn’t work.” Another put it more succinctly: “Ninety percent failure, ten percent synthesis.”

And yet, that slow grind is precisely what makes the rare breakthroughs so powerful. Diffusion models were first proposed in 2015 and drew limited attention until later refinements, around 2020, made them competitive for image generation. GPT-style language models and reinforcement learning from human feedback (RLHF) likewise emerged from iterative experiments that initially seemed unremarkable. These breakthroughs do not happen overnight. They are the accumulated result of patience, persistence, and deep theoretical understanding.

When a new method succeeds, it can redefine an entire segment of the industry. Transformers changed how systems process sequential data. Diffusion unlocked a wave of generative image and video applications. RLHF turned large language models from research curiosities into usable assistants.

The upside of a single discovery is so large that each researcher represents, in a sense, a venture-scale bet.

But those bets only pay off when others can make use of them. Research alone does not create impact; translation does. A model architecture or training method has value only when it is replicated, deployed, and maintained under real-world constraints such as latency, cost, and compliance.

This is where most organizations find their point of leverage. Few can afford to bankroll frontier research teams, but any company can invest in people who bridge the gap between state-of-the-art benchmarks and real-world products. That capability — reading a paper, reproducing its results, and implementing a practical version at scale — is the most direct and measurable way to turn research into advantage.

Skills and background

The archetype of an AI researcher’s résumé is easy to picture: a PhD in computer science, mathematics, or physics; multiple papers at conferences such as NeurIPS, ICML, or ICLR; perhaps a postdoctoral stint at a top lab; and a citation record in the hundreds. That profile still defines the upper tier of the field—and it helps explain why compensation has accelerated so quickly.

Beneath the academic credentials, the role depends on a layered mix of skills:

- Foundational literacy. Strong grounding in linear algebra, probability, optimization, and statistical learning theory. These are the tools that make model design and analysis possible.

- Applied machine learning. Practical understanding of model implementation, error analysis, and reproducibility. Researchers must be able to turn mathematical ideas into running experiments.

- Systems and infrastructure. Proficiency in programming (Python, C++, CUDA) and experience with experimentation frameworks such as PyTorch, JAX, or TensorFlow. Many breakthroughs come from finding new efficiencies in how models are trained and scaled.

- Experimental research. Skill in hypothesis design, ablation studies, benchmarking, and collaboration with other scientists. A track record of publication and open-source contribution serves as both validation and community signal.

- Frontier innovation. Deep specialization in areas such as reinforcement learning, scaling laws, multimodal systems, interpretability, or alignment—the domains that push the science forward and often define the next generation of products.

Together, these layers form the practical architecture of an AI researcher’s expertise: theory at the base, experimentation in the middle, and innovation at the top. It is a stack that few master end-to-end, but the closer a researcher gets to spanning it, the more valuable their work becomes.

For most companies, this is where the gap appears. Few need staff engineers who can discover new scaling laws or publish at NeurIPS. What they do need are engineers who can absorb these breakthroughs quickly, replicate them in-house, and adapt them to production realities.

This is where the skills diffusion curve begins to take effect. Today’s research focus — say, multimodal fusion — becomes tomorrow’s engineering competency, such as building systems that understand both video and text. Within 12 to 18 months, what appears cutting-edge in an academic paper often becomes a required skill in a job description.

The AI researcher role will remain concentrated among PhD-heavy teams at the top, but the broader impact lies in how those skills disseminate.

That diffusion dynamic also explains the extraordinary attention on compensation. The market is not only paying for research; it is paying for proximity to the talent and ideas that move theory into practice.

Salary benchmarks, market heat

If you only read the headlines, you’d think every AI Researcher is walking around with a $50M golden ticket. The truth is messier. Yes, there are outlier packages that dwarf first-round NFL draftees’ salaries, but those are exceptions that warp perception.

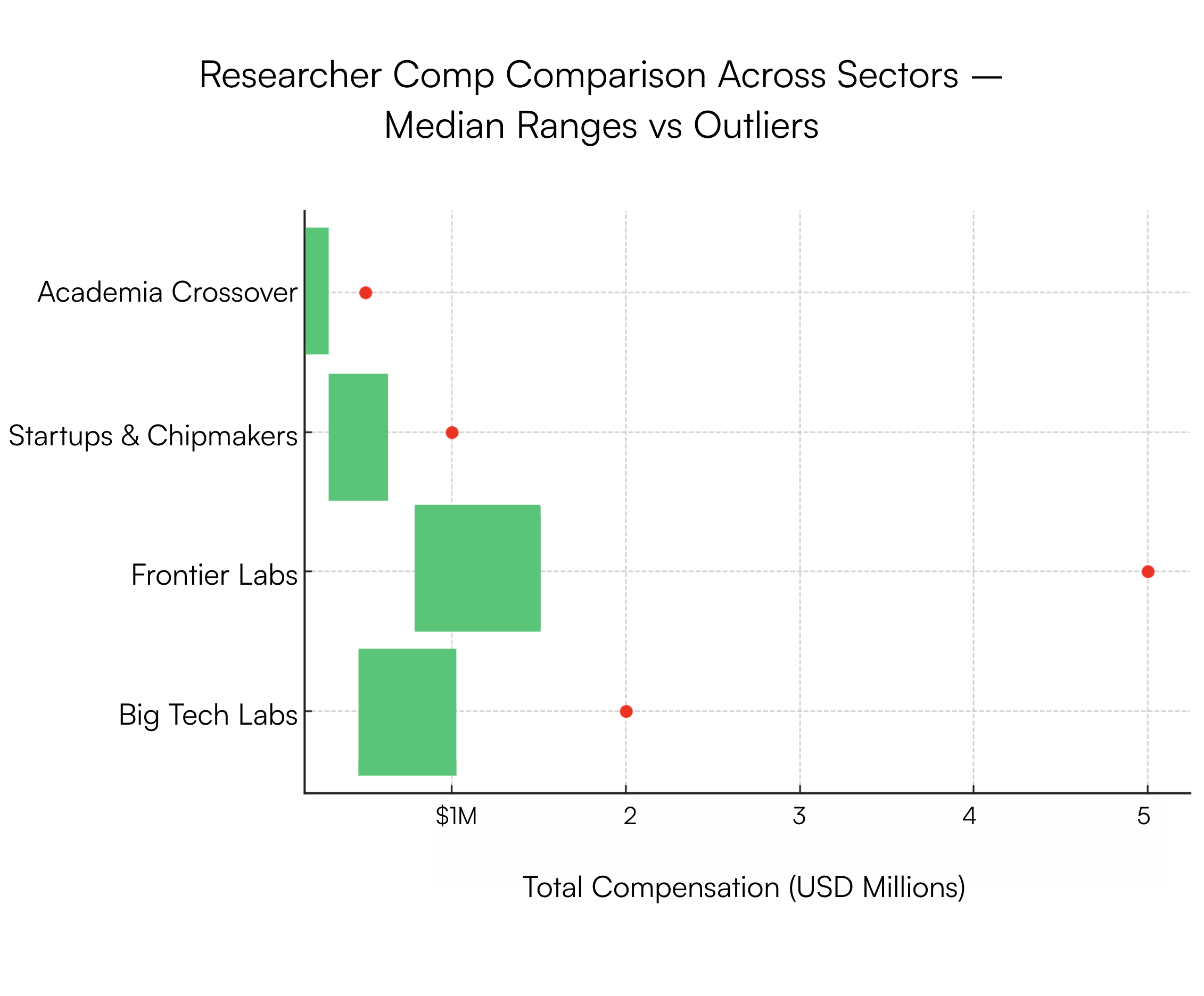

The median reality looks more like this:

- Big Tech labs (Meta, Google, Microsoft, Apple) → $500K to $1M+ total comp for staff- and principal-level researchers.

- Frontier labs (OpenAI, Anthropic, xAI) → packages stretch higher, often with equity or long-tail bonuses that push into the multi-million range.

- Startups and chipmakers → lower cash, sometimes offset by equity gambles that could pay off (or not).

- Academia crossover → far less lucrative, but often combined with consulting or dual appointments at labs.

A quick search on Levels.fyi indicates that the median base comp for the title sits at $168K. Anything above $380K puts you in the top 10%.

What I found when I went to the job boards: Most of the roles labeled “AI Researcher” aren’t the same breed as the celebrity PhDs getting courted by Meta or OpenAI. They’re often hybrid positions — closer to applied research engineers — where the mandate is to implement and adapt methods, not to invent the next scaling law.

The job-board researcher is a doer. They’re the ones running experiments, fine-tuning models, or building applied prototypes inside commercial teams. The frontier researcher — the one commanding hedge-fund money — is a paper-driven inventor whose work resets the playing field itself. Both are vital, but they occupy entirely different strata of the market. Calling them by the same title obscures the split and confuses job-seekers, employers, and even investors.

The scarcity at the top is what drives the outsized numbers. There may only be a few hundred individuals globally capable of pushing theory forward in ways that create new billion-dollar categories.

Meanwhile, thousands of companies post “research” jobs that live further downstream, closer to engineering.

What matters more than the absolute numbers is the distortion effect. The minute one lab dangles $10M at a candidate, every other lab has to raise its own pay bands just to stay credible. And when those numbers leak into the open, engineers and data scientists downstream start benchmarking themselves against impossible figures, fueling even more churn in an already scarce market.

Recruiters and VCs alike have started to vent publicly, pointing out that even offers north of $2M aren’t enough to compete with the gravitational pull of OpenAI or Anthropic:

“Meta is currently offering $2M+/yr in offers for AI talent and still losing them to OpenAI and Anthropic. Heard ~3 such cases this week.

The AI talent wars are absolutely ridiculous.

Today, Anthropic has the highest ~80% retention 2 years in and is the #1 (large) company top AI…” (Deedy, @deedydas, June 10, 2025)

We have to acknowledge the bifurcation: researchers will always cluster at the very top, while the rest of the world focuses on cultivating researcher-adjacent capacity.

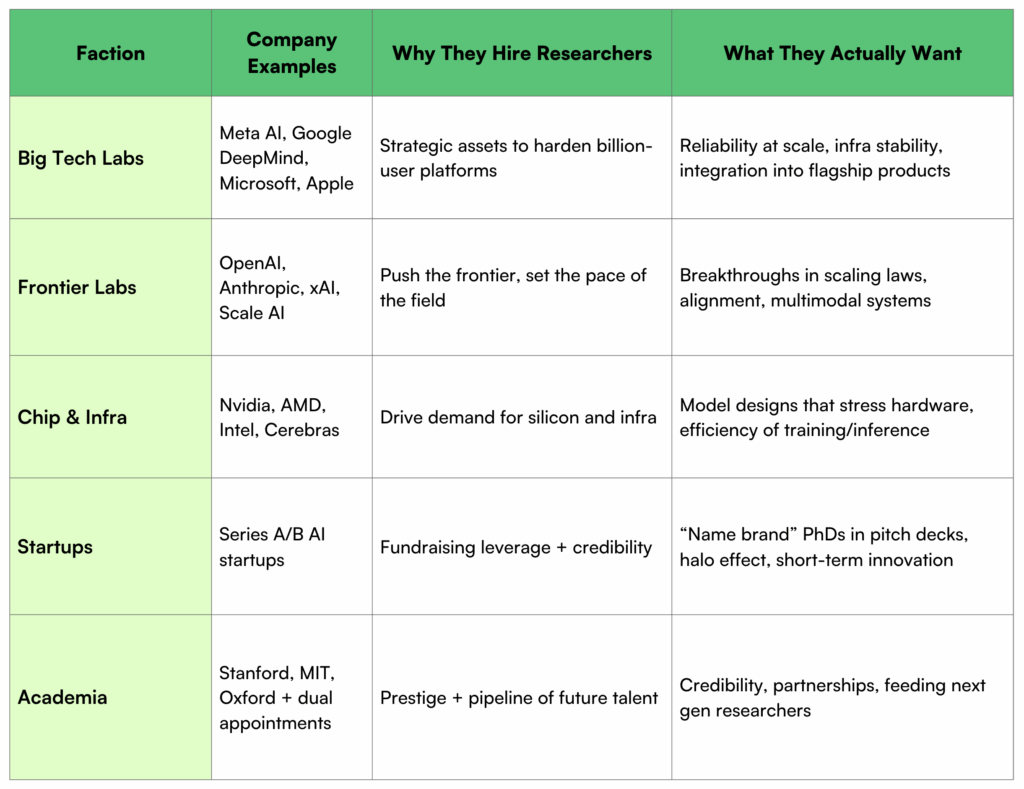

If you zoom out, the AI Researcher market isn’t one market at all; it’s a patchwork of competing agendas. The same job title means radically different things depending on who’s paying the bill. Let’s revisit the categories of companies to understand why each one hires AI Researchers:

- Big Tech labs (Meta AI, Google DeepMind, Microsoft Research, Apple). These companies treat researchers as strategic assets. The job is less about publishing for prestige and more about hardening the next billion-user platform. Think Copilot, Gemini, LLaMA — all fueled by researchers, but housed inside industrial-scale engineering orgs.

- Frontier labs (OpenAI, Anthropic, xAI, Scale AI). Here the mandate is existential. Researchers aren’t hired to polish existing products; they’re expected to push the frontier itself — scaling laws, alignment, multimodal systems. The compensation reflects the risk and the hype.

- Chip & infra players (NVIDIA, AMD, Intel, Cerebras). Their bet is straightforward: the researchers who design tomorrow’s workloads also shape demand for tomorrow’s silicon. Many researcher roles here skew toward systems optimization and the efficiency of training and inference at scale.

- Startups. The wildcard. A Series A/B founder landing even one top-tier researcher is a fundraising weapon. These hires get paraded in pitch decks because they signal credibility to investors. The reality, though, is that many startups can’t hold on to them for long; frontier labs or Big Tech eventually outbid them.

- Academia crossover. Universities still matter. Some researchers hold dual appointments, such as teaching at Stanford while also drawing a salary from a lab. Others jump back and forth, using academic credibility as leverage in industry negotiations.

The through-line here is that every faction wants researchers for a different reason:

- Big Tech → stability + productization

- Frontier labs → breakthroughs + IP

- Chipmakers → demand creation + infra optimization

- Startups → credibility + fundraising

- Academia → prestige + pipeline

The same title often hides five different job descriptions. That means if you’re not in one of those top brackets, you need a different playbook altogether.

Pro tip: Instead of burning cycles trying to lure a unicorn into your org, invest in the layer that makes their work usable. Build a team of engineers who can take what Meta publishes and turn it into something your customers can actually touch.

Why companies value AI researchers

Companies don’t throw $10M+ at researchers out of charity. They do it because a single breakthrough can tilt the entire market. They know that:

- Breakthroughs create new S-curves. Transformers gave us GPT. Diffusion gave us generative art. Reinforcement learning from human feedback gave us usable chatbots. Each started as a paper, then cascaded into billion-dollar product categories.

- IP is currency. Researchers publish papers and file patents that become defensive moats. Labs hoard these not just to ship products, but to lock competitors out of the next architecture.

- They’re talent magnets. Drop one respected researcher into your lab and suddenly you attract three more plus some publicity. The halo effect is real: engineers, PMs, and even investors cluster around them.

- They future-proof perception. Having researchers signals to the market — and to regulators — that you’re serious about advancing AI responsibly, not just repackaging APIs.

The paper itself is not the moat. The ability to operationalize it is. In the end, breakthroughs are just the raw material. Competitive advantage lies in what happens next: who can turn an idea into a product before the rest of the world even finishes reading the paper.

That level of influence does not come easily. The path to becoming an AI researcher is long, selective, and rooted in deep academic and technical mastery.

How to become an AI researcher

The path into AI research remains one of the narrowest and most demanding in the technology world. For most, it follows a well-worn academic trajectory:

- Academic track. Bachelor’s degree followed by a PhD in computer science, mathematics, physics, or a related field, often supplemented by a postdoctoral fellowship or research residency.

- Publication track. A steady output of papers at conferences such as NeurIPS, ICML, or ICLR, alongside a growing citation record and active collaboration with peers.

- Lab appointment. Eventually, a position at a leading research organization (DeepMind, OpenAI, Meta, Anthropic, or a comparable institution) where the work centers on advancing model architectures and training methodologies rather than building products.

That progression explains why the majority of top-tier researchers hold doctorates and why global mobility has become the norm.

The leading labs recruit internationally, competing for a small set of candidates with both deep theoretical expertise and a record of publication.

There are, however, alternate routes. A small number of practitioners have entered the field through unconventional means — open-source contributors whose repositories gained critical mass, Kaggle competitors with repeated leaderboard wins, or applied engineers whose innovations blurred the line between research and product. These examples are rare, but they prove the door is not fully closed.

For anyone aspiring to join this world, the bar is this:

- Strong grounding in mathematics and theory.

- Proficiency in Python, with working fluency in performance-level languages such as C++ and CUDA.

- A visible technical footprint—papers, repositories, benchmark results, or reproducible experiments.

- Focused expertise in a core domain such as reinforcement learning, scaling laws, multimodal systems, or interpretability.

- The patience to pursue ideas that fail nine times out of ten.

For everyone else, the more practical play is not to pursue the PhD path, but to ride the diffusion curve: applying the breakthroughs researchers produce to real systems and products. That is where most of the industry’s opportunity lies — translating discovery into deployment.

The future of the AI researcher role

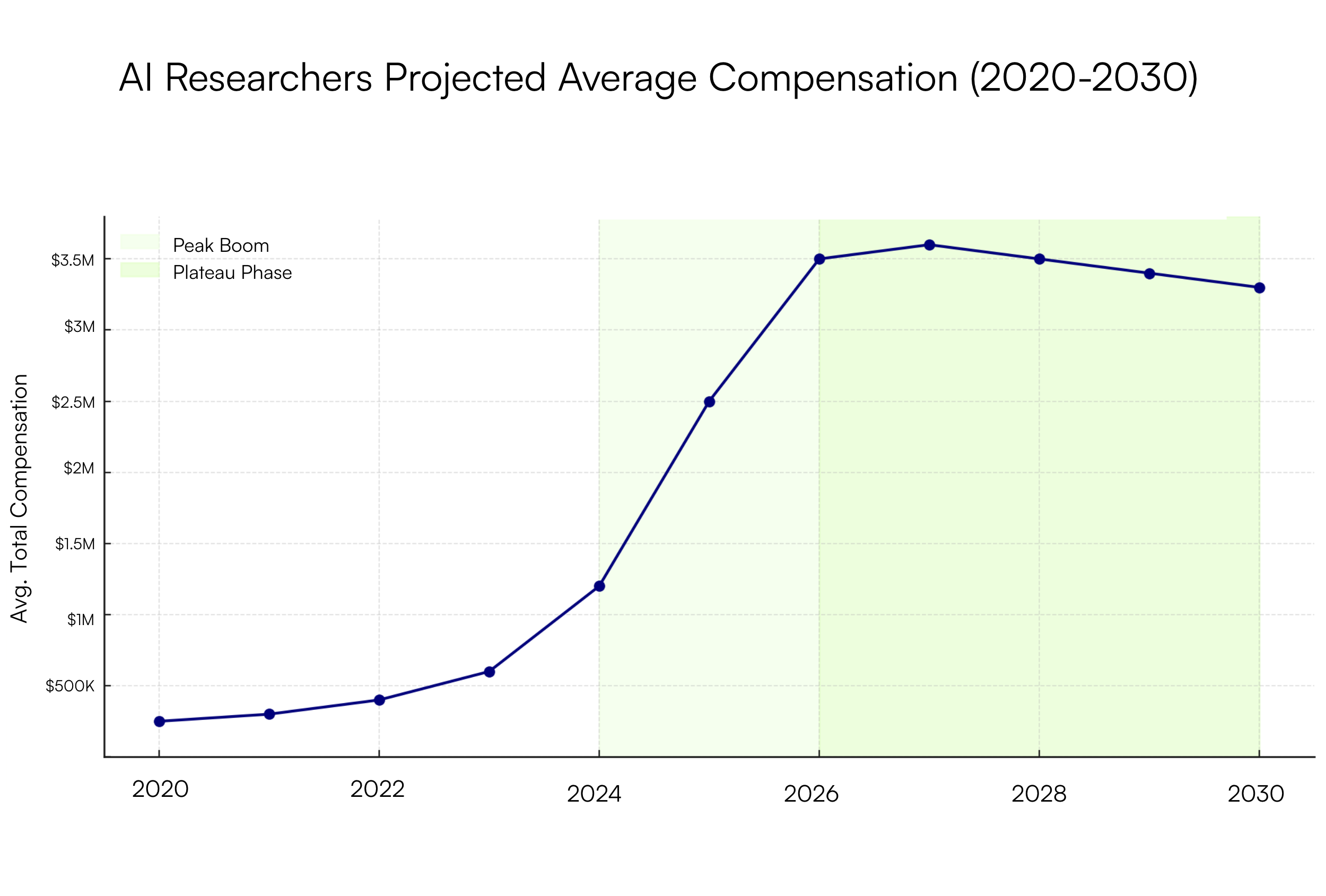

The AI researcher boom will not look the same in five years. The frenzy we see today — where top scientists can ignite bidding wars and command seven-figure signing bonuses — resembles the peak of an asset bubble. But just as markets mature, so too will this discipline. The rarefied circle of PhD-heavy research talent will remain valuable, yet its shape and purpose inside the technology economy will evolve.

What comes next is a rebalancing: from celebrity researchers to scalable, systematized R&D pipelines, and from breakthrough hype to integrated, application-driven AI development.

Here is how that evolution is likely to unfold:

- Consolidation of talent → a handful of labs continue to hold most of the elite.

- Inflated salaries cool → the $25M-plus deals fade; premiums persist.

- Closer integration → research and engineering operate in tighter feedback loops, with shared tools and co-development cycles.

- AI-augmented research → models assist in experiment design while humans interpret and refine results.

- Diffusion accelerates → the gap from arXiv to GitHub to production shortens from years to months.

Together, these shifts point toward the professionalization of AI research — moving it from a frontier discipline to a core capability embedded within major tech-driven organizations.

Analysts expect compensation growth to stabilize after 2026. The “peak boom” years of 2023–2026 have been driven by intense competition among frontier labs. As tools mature and workflows become more automated, that curve should flatten. Pay will remain high but become increasingly linked to strategic impact rather than raw scarcity.

The superstar researcher will endure, but breakthroughs that once required specialized labs will also cascade outward: millions of engineers fine-tuning open models, startups deploying domain-specific agents, and entire industries integrating research-level capabilities into everyday software.

The future of AI research will be defined by more people doing research-like work aided by better tools, faster feedback, and a deeper connection between experimentation and execution.

Conclusion

The AI researcher has become the archetype of modern technical prestige. They’re courted like an athlete, compensated like a fund manager, and tasked with inventing what comes next. Yet most companies will never need to hire one. What they need is the capability to keep pace: to understand the research, absorb it quickly, and turn it into products that matter.

Research defines the frontier; engineering determines whether you capture it. If you’re wondering where to focus, build the muscle to translate invention into impact repeatedly, responsibly, and at scale.

The frontier will continue to shift. The question every CTO should be asking now is whether their teams can adapt fast enough to move with it.
