This piece is part of our Hottest Jobs in Tech series — a set of op-eds unpacking the most in-demand AI roles. We’ll take a journalistic lens to what these jobs really mean, what companies expect, and how they’re shaping the future of software.

AI Engineer: title, trend, or Trojan horse?

The hottest job in tech right now is also the most predictable. Five years ago, “AI Engineer” wasn’t even listed as a role on job boards. Today, it’s sprouting up everywhere.

Every few decades, we have a platform shift that spawns a new set of roles. Web gave us web dev. Mobile gave us iOS and Android. AI is giving us AI Engineers.

The listings look strong: bases north of $250,000 at top firms, with equity pushing total comp into the high six figures.

But scroll a little deeper and the variability hits. Recruiters I spoke with told me the same story: in one posting an AI Engineer looks like MLOps, in another it’s a SWE wiring up frontier models, and sometimes the role isn’t clearly defined at all. That’s normal for new roles. Scope starts broad, then standardizes as patterns emerge.

After weeks of interviews and job-posting analysis, the picture is consistent with past cycles: a role is forming around the applied layer. AI Engineers bridge research, product, and software so intelligence ships, scales, and creates value.

What’s also notable is how quickly the role is hardening into a distinct identity: a bridge role that didn’t exist until LLMs forced the industry to invent it.

Strip away the noise and you find a role that is crystallizing fast and will ultimately dictate which AI ideas make the leap from demo to dependable product.

In this article, my goal is to unpack what the role means today, what AI Engineers actually do, and why the title could come to define the small group of next-gen builders shaping the coming decade of software.

The role every company needs, now standardizing

Roles follow strategy, and “What’s our AI story?” has replaced “What’s our mobile app?” The AI Engineer mandate is clear: take a model out of the lab and make it useful at scale.

At its simplest, the AI Engineer is the person who makes models usable in the real world. These engineers aren’t publishing papers or simply tuning hyperparameters; they’re wiring models into products that millions of people touch every day. That’s why some people call this evolving breed of builders the “new full-stack”: they sit between research, product, and engineering, translating academic breakthroughs into revenue.

That’s the mandate, anyway.

The role is still slippery. Because it overlaps with so many neighbors, the title ends up meaning ten different things depending on which company you ask, with job postings reading like grab bags of mismatched expectations — part data scientist, part infra engineer, part magician.

Data scientists live in the realm of experiments and analysis.

ML engineers focus on training and deploying models.

AI Engineers, at least in practice, blur both while carrying a set of responsibilities closer to a product engineer: make it work, make it scale, make it valuable. And use AI to do it.

Vinija Jain, an engineer at Google (and former Head of AI at a stealth startup), told me the titles blur even more at FAANG scale:

“AI Engineer and ML Engineer are often used interchangeably. Sometimes it’s even ‘Software Engineer, Machine Learning.’ The role is [very much] applied in nature. [That means] productionizing research and adapting it to an existing product across any modality, from model changes and feature implementations to evaluation.”

Vinija contrasted it with research roles: “Research is more fundamental — pushing the model forward and publishing results — though, in practice, the lines aren’t hard. You’ll see MLEs publish and researchers ship product. The beauty of this field is you don’t have to color inside the lines.”

That fluidity isn’t only visible inside Big Tech. It’s something practitioners on the ground are quick to point out, too.

“Think of AI Engineers, basically, as translators,” another practitioner told me during a meetup at a local Santa Clara coffee house. “The irony is, without us, all the research in the world just sits in GitHub repos,” he shared, with the kind of matter-of-fact tone that could only come from living through this real-time shift. “But most execs still think of us as glue code.”

It’s a paradox: the people wiring AI into products are indispensable, but many (especially junior-level) are still treated like support staff instead of the ones holding the whole system together.

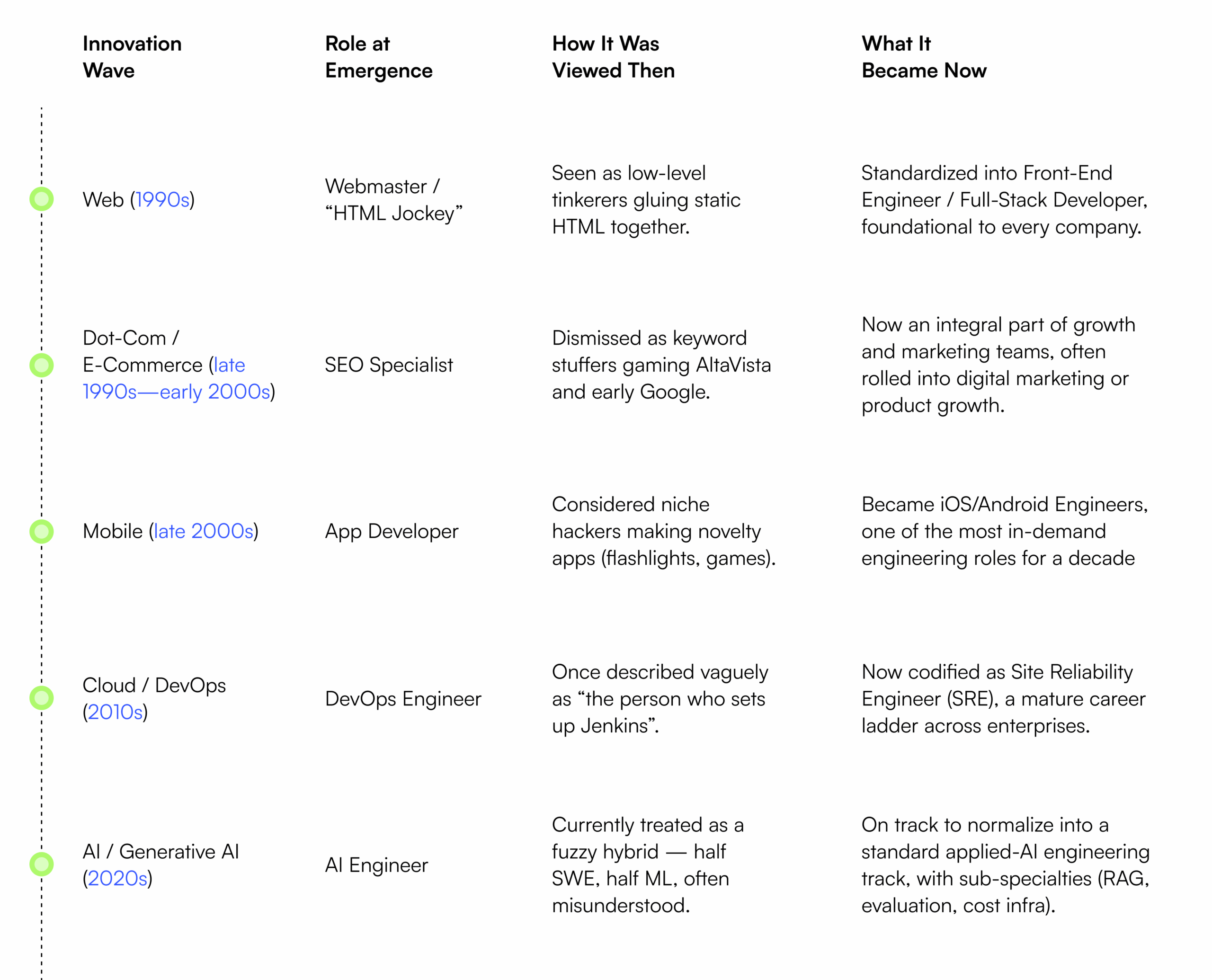

And that’s where this role seems to sit today. Applied roles often feel like glue at first, then become core. In the 1990s, it was ‘HTML jockeys.’ In the 2010s, ‘app devs.’ Today, AI Engineers. That’s how these cycles work: novelty → necessity → norm.

Every tech boom brings its own “weird” new roles that sound fuzzy at first, then harden into the default. We’ve seen it with webmasters on the web, app developers on mobile, and DevOps in the cloud: new roles start as experiments, then become the careers every company depends on. AI Engineers are simply the next turn of that wheel.

Beyond prompts: the unsexy work that ships

Ask ten AI Engineers what they work on and you’ll hear different backlogs with the same backbone: APIs and pipelines, fine-tuning and evals, retrieval quality, latency and cost, privacy and compliance.

Bassim Eledath is a San Francisco-based AI Engineer who specializes in voice AI systems. As he told me, the hardest challenges fall into three buckets: context quality, model nondeterminism, and domain constraints. Those buckets are becoming the shared playbook teams hire and train against:

- Context gathering, where garbage in means garbage out

- Nondeterminism: what makes LLMs creative also makes them unreliable

- Vertical-specific hurdles (e.g., strict compliance in healthcare, latency demands in voice, or explainability requirements in finance)
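
To make the nondeterminism point concrete: one common mitigation (not one Eledath prescribed) is to sample the same prompt several times and measure how often the answers agree, in the spirit of self-consistency voting. Here is a minimal Python sketch, where `generate` is a hypothetical stand-in for whatever model call your stack makes:

```python
from collections import Counter
from typing import Callable


def majority_vote(generate: Callable[[str], str], prompt: str, n: int = 5) -> tuple[str, float]:
    """Sample `generate` n times; return the most common answer and the
    share of samples that agree with it."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # Light normalization so "Paris" and "paris " count as the same answer.
    answers = [generate(prompt).strip().lower() for _ in range(n)]
    winner, count = Counter(answers).most_common(1)[0]
    return winner, count / n
```

A low agreement score is a useful signal in its own right: a team might route those cases to a stronger model or to a human reviewer. Where to draw the line for “low” is a judgment call this sketch leaves open.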


In voice systems, the stakes rise: a five-second delay that feels tolerable in chat is unbearable when spoken aloud, and ‘turn detection’ (knowing when the human is done talking) remains one of the hardest open problems.
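
Why is turn detection so hard? The naive baseline is a silence timeout: once the speaker has gone quiet for long enough, assume they’re done. A rough Python sketch follows; the thresholds are made up, and each audio frame is assumed to arrive as a precomputed RMS energy value:

```python
from dataclasses import dataclass, field


@dataclass
class SilenceEndpointer:
    """Naive end-of-turn detector: the turn ends after a fixed run of quiet
    frames that follows some speech. All thresholds are illustrative."""
    energy_threshold: float = 0.01  # RMS level treated as speech (assumed)
    silence_ms: int = 700           # quiet time required to end the turn (assumed)
    frame_ms: int = 20              # duration of each audio frame
    _silent_ms: int = field(default=0, init=False)
    _heard_speech: bool = field(default=False, init=False)

    def push(self, frame_rms: float) -> bool:
        """Feed one frame's energy; return True once the turn has ended."""
        if frame_rms >= self.energy_threshold:
            self._heard_speech = True
            self._silent_ms = 0  # any speech resets the silence clock
            return False
        if not self._heard_speech:
            return False  # leading silence: the user hasn't started talking yet
        self._silent_ms += self.frame_ms
        return self._silent_ms >= self.silence_ms
```

The trade-off is the whole problem. Shorten `silence_ms` and you cut off people who pause mid-thought; lengthen it and every reply starts later. That tension is why many teams look beyond simple rules like this one.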

This mix of unpredictability and domain friction isn’t an outlier. It is the job.

On paper, AI Engineers are expected to build, fine-tune, and integrate models, develop APIs and infrastructure to deploy at scale, collaborate with product teams to design AI-powered features, and monitor performance across bias, drift, latency, and cost.

But in practice, it’s a spectrum.

At an enterprise bank, an “AI Engineer” might be the person hardening compliance pipelines. At a startup, they might just be the scrappy developer duct-taping OpenAI APIs into a working prototype.

Scope flexes by stage and sector, but that range is narrowing as patterns, frameworks, and evaluation practices mature.

A software engineer can lean into applied AI without needing a PhD. An ML engineer can claim the title by showing product-level impact.

Titles are elastic and salaries vary, but if you’ve shipped something AI-powered into production, the market rarely cares what exact mix of skills got you there.

Curiosity and resilience remain the hiring tell, and they’re being paired with clearer skill maps as the role professionalizes.

What AI Engineers are expected to know

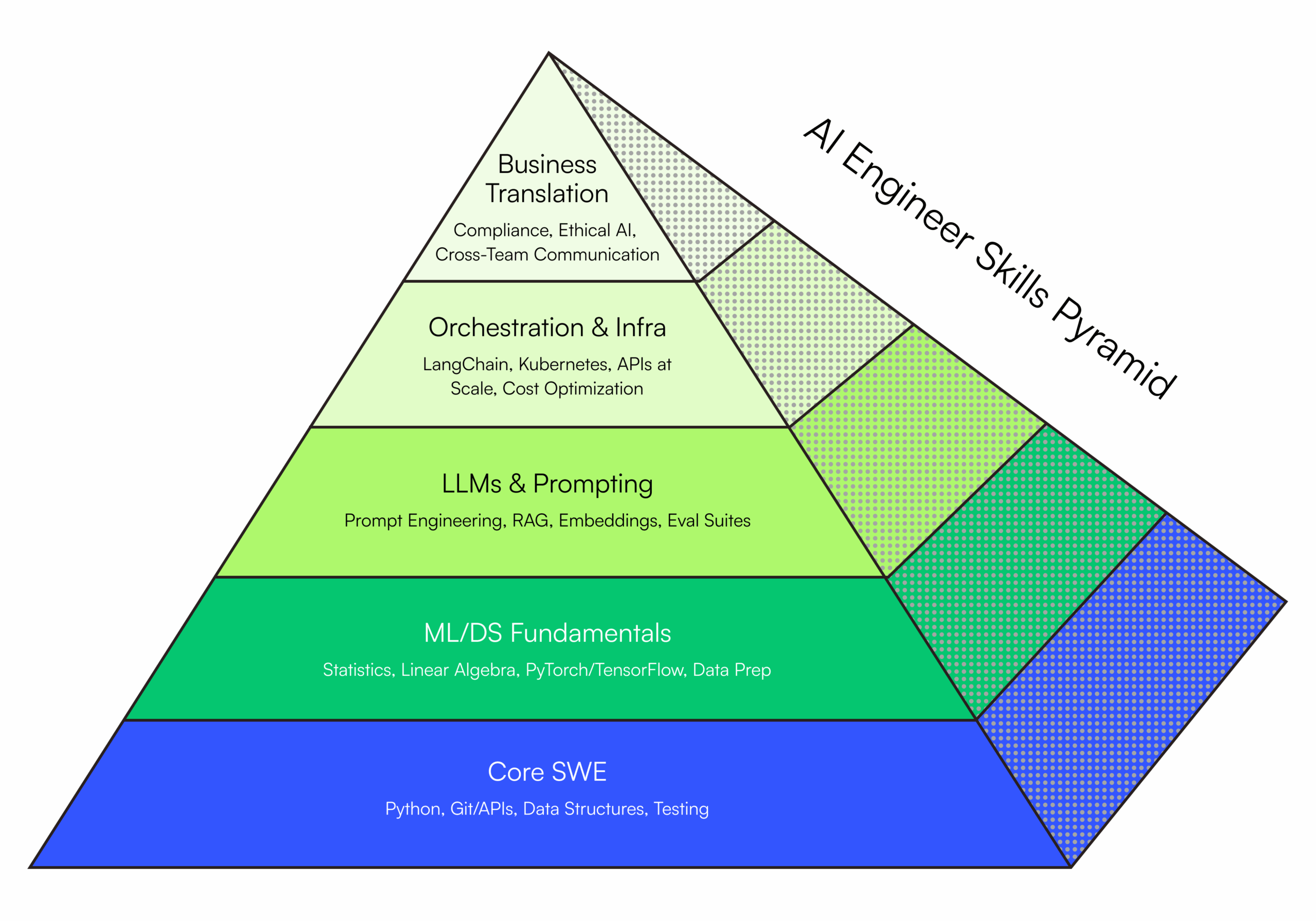

Every era settles on a toolkit. For AI Engineers, the core is now clear: Python, PyTorch or TensorFlow, cloud services, data pipelines, and the applied AI layer that turns models into products. That applied layer means orchestration, retrieval, evaluation, and cost control.

In practice, this looks like multi-agent workflows, shared memory and context management, evaluation harnesses, routing and caching, and guardrails that keep latency and spend in check.
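
Routing and spend guardrails are easier to picture in code. Here is a minimal sketch, assuming two hypothetical model tiers with illustrative prices (not any provider’s real rate card) and a caller that already knows the prompt’s token count: send each request to the cheapest tier that fits, and refuse calls that would blow the budget.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str                 # hypothetical model identifier
    usd_per_1k_tokens: float  # illustrative price, not a real rate card
    max_prompt_tokens: int    # largest prompt this tier should handle


class BudgetExceeded(RuntimeError):
    """Raised when a call would push spend past the configured budget."""


class Router:
    """Sends each request to the cheapest tier that fits, with a spend guardrail."""

    def __init__(self, tiers: list[ModelTier], budget_usd: float):
        # Cheapest first; this sketch assumes pricier tiers are more capable.
        self.tiers = sorted(tiers, key=lambda t: t.usd_per_1k_tokens)
        self.budget_usd = budget_usd
        self.spent_usd = 0.0

    def route(self, prompt_tokens: int, hard: bool = False) -> ModelTier:
        fits = [t for t in self.tiers if prompt_tokens <= t.max_prompt_tokens]
        if not fits:
            raise ValueError("prompt is too large for every tier")
        # "hard" requests escalate to the priciest tier that fits.
        tier = fits[-1] if hard else fits[0]
        # Prompt tokens only; output tokens are ignored in this sketch.
        cost = prompt_tokens / 1000 * tier.usd_per_1k_tokens
        if self.spent_usd + cost > self.budget_usd:
            raise BudgetExceeded(f"{tier.name} call would exceed the budget")
        self.spent_usd += cost
        return tier


TIERS = [
    ModelTier("small-model", usd_per_1k_tokens=0.0005, max_prompt_tokens=2_000),
    ModelTier("large-model", usd_per_1k_tokens=0.01, max_prompt_tokens=100_000),
]
```

Real routers weigh far more than prompt length (task type, eval scores, latency targets). The point is the shape: a cheap default, an escalation path, and a hard stop on spend.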

The new piece is the layer most CS programs don’t teach. You need to:

- Orchestrate prompts and tools across tasks

- Wire retrieval-augmented generation that actually returns the right facts

- Build and maintain evaluation suites for quality, drift, bias, and safety

- Choose and integrate providers (OpenAI, Anthropic, open source) based on constraints like cost, latency, and privacy

- Optimize inference for cost, throughput, and reliability
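
What does “actually returns the right facts” look like in practice? Usually, a retrieval eval: a set of queries, each labeled with the document that should come back, scored by recall@k. The sketch below swaps in a toy bag-of-words similarity where a real system would use embeddings; the documents and queries are invented for illustration.

```python
import math
import re
from collections import Counter


def _bag_of_words(text: str) -> Counter:
    # Toy stand-in for an embedding model: lowercase word counts, no stemming.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int) -> list[str]:
    """Return the ids of the k docs most similar to the query."""
    q = _bag_of_words(query)
    ranked = sorted(docs, key=lambda doc_id: _cosine(q, _bag_of_words(docs[doc_id])), reverse=True)
    return ranked[:k]


def recall_at_k(eval_set: list[tuple[str, str]], docs: dict[str, str], k: int) -> float:
    """Fraction of (query, relevant_doc_id) pairs whose doc lands in the top k."""
    hits = sum(relevant in retrieve(query, docs, k) for query, relevant in eval_set)
    return hits / len(eval_set)


DOCS = {
    "refund": "Refunds are issued within 14 days of a return.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "warranty": "The warranty covers manufacturing defects for one year.",
}
EVAL_SET = [
    ("how many days until my refund", "refund"),
    ("how long does shipping take", "shipping"),
    ("does the warranty cover defects", "warranty"),
]
```

On this toy set, recall@1 is 2/3 and recall@2 is 1.0. The refund query misses at k=1 because it shares “days” with the shipping doc while the tokenizer never connects “refund” to “Refunds”: exactly the kind of quiet retrieval failure an eval suite exists to catch.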

On paper, this can sound abstract. In reality, AI Engineers in this next wave spend as much time in orchestration and evaluation dashboards (coordinating memory, prompts, agents, and routes) as they do in a traditional IDE.

Based on dozens of job descriptions and a few practitioner interviews, a consistent core of sought-after “hard skills” keeps surfacing. They include:

- Programming fluency (Python)

- ML/DL frameworks (PyTorch, TensorFlow, Hugging Face)

- NLP and prompt engineering

- Model evaluation and bias/drift detection

- Deployment and cloud infrastructure (APIs, containers, Kubernetes)

- Cost and latency optimization

- Data pipelines and data engineering

- Statistical and mathematical grounding

- Ethical AI awareness

- Cross-team communication and collaboration

- Rapid prototyping and the ability to ship fast

This is no longer a grab bag. It is the baseline stack hiring teams screen for.

As Eledath noted, the habits he carried over from data science were less about algorithms and more about how you treat data itself. He told me the transferable skill he’s embraced most is simply “looking at data closely.” Whether you’re predicting churn or debugging LLMs, the work reduces to aligning the output distribution with the expected distribution. He emphasized that the most underrated skill is embracing nondeterminism, something DS/ML folks prepare for but traditional software engineers struggle with.
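
Eledath’s framing of aligning the output distribution with the expected distribution can be measured directly. One simple choice (mine, not his) is total variation distance, TV(p, q) = ½ Σ |p(x) − q(x)|, between the labels a model produces and the labels you expected. A minimal Python sketch with invented data:

```python
from collections import Counter


def distribution(labels: list[str]) -> dict[str, float]:
    """Turn a list of categorical labels into normalized frequencies."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def total_variation(p: dict[str, float], q: dict[str, float]) -> float:
    """0.5 * sum |p(x) - q(x)|: 0.0 means identical, 1.0 means no overlap."""
    support = p.keys() | q.keys()
    return 0.5 * sum(abs(p.get(x, 0.0) - q.get(x, 0.0)) for x in support)


# Hypothetical example: human-labeled expectations vs. what the model produced.
expected = distribution(["positive"] * 6 + ["negative"] * 4)
observed = distribution(["positive"] * 9 + ["negative"] * 1)
gap = total_variation(expected, observed)  # about 0.3
```

Here the gap is about 0.3: the model says “positive” 90% of the time against an expected 60%. Tracking a number like this over time is one lightweight way to spot drift.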

But soft skills matter just as much. Unsurprisingly, the best AI Engineers aren’t the ones who can recite transformer architectures from memory, but those who can sit with a product manager, understand a business need, and quickly map it into an AI workflow.

That’s the “new full-stack” framing you hear tossed around in tech circles: not just knowing how to code, but knowing how to bridge code, models, and product reality.

How developers are stepping into the role

Most AI Engineers I’ve talked to didn’t start with the title. They were software engineers, ML engineers, or data scientists who got pulled into the AI wave as the work appeared.

That mirrors past waves, where real projects trumped formal syllabi.

I spoke to one developer in Bengaluru who started out maintaining cloud infra for a retail startup. When his team needed to experiment with a chatbot, he bolted OpenAI’s API onto their stack as a quick hack. That experiment snowballed, first into a customer-facing product, then into a promotion. He joked that the job found him before he ever thought to call himself an “AI Engineer.”

That led me to another stark realization: What differentiates this new breed isn’t credentials, college degree, or geographic location; it’s the raw ability to learn and ship. Having a GitHub repo with a working RAG system or a Copilot-like integration in production is unquestionably worth more than another certificate.

YouTuber and ex-WhatsApp engineer Jean Lee’s research showed that some companies are slapping “AI Engineer” on certain roles and quietly demanding 8 or 10 years of ML experience or even a PhD. As with most jobs, these roles still likely attract applicants who don’t meet the hard “requirements” but toss their name in the hat anyway, which helps explain why applicant pools look like avalanches, with thousands of résumés for a single coveted position.

And, finally, the market has noticed. Companies are relying less on screening résumés by university or advanced degree. Instead, they’re putting a premium (and a nice price tag) on real, provable AI skills.

The path isn’t linear, but the pattern is clear:

Start by wiring AI into whatever stack you’re already in, build something ugly that works, share it in public, and keep iterating. In this market, titles are elastic and hype runs hot, but the strongest quality you can have is proof you can ship.

That’s why the conversation about AI Engineer salaries has become as charged as the job title itself.

Paychecks, outliers… and reality

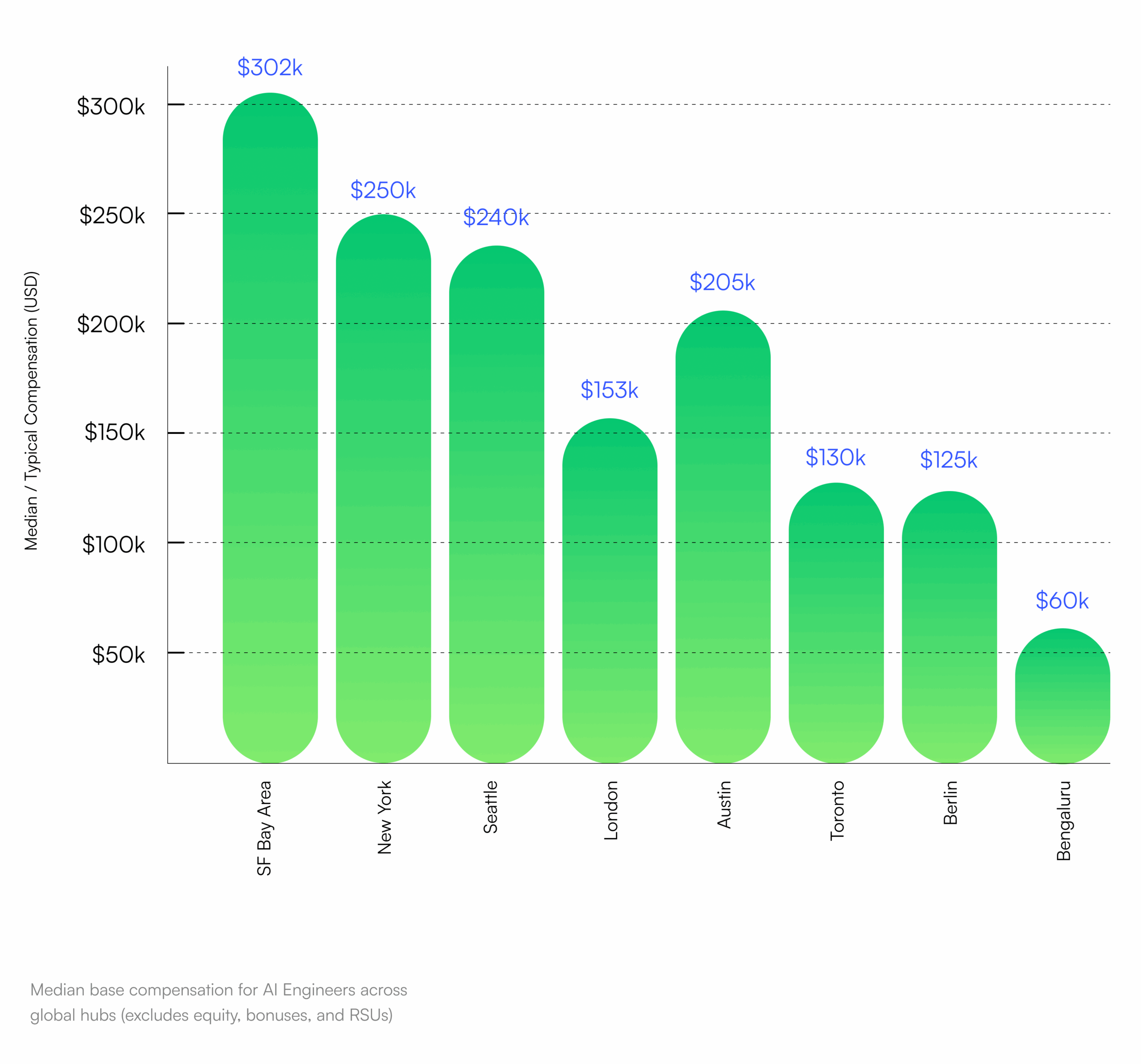

Overall, base compensation for AI Engineers is impressive and growing. Levels.fyi and other crowd-sourced data place many roles in the $170K–$230K band, with top labs and funds sometimes exceeding that.

But to understand what senior AI talent really earns, you have to peel back one more layer.

Total compensation packages often look very different from the base headline. Many AI Engineers today receive large equity grants, profit-participation units, performance bonuses, and signing or retention awards that can double or even triple their take-home over time. In some cases, these instruments create an upside worth far more than cash salary — but also make the realized value more volatile, tied to market conditions or company performance.

Across major tech hubs (New York, Seattle, London, Austin, Toronto, Bengaluru), base salaries for AI Engineers tend to hit the upper end of local senior engineering bands. In the Bay Area and New York, base pay tops out around $300K, especially for senior or executive-tier roles at labs like Anthropic or OpenAI, or inside hedge funds racing to deploy AI talent.

Still, when you factor in equity and incentives, many AI Engineers at well-funded companies are pulling in 2×–3× the base numbers — a stark reminder that the “real” paycheck in AI engineering is often a moving target.

Live postings at established companies (LinkedIn, September 2025) confirm the picture: most roles sit in the $170K–$230K band, with only a few creeping above. Generous, yes, but not the cartoonish, unicorn-level numbers that make the rounds on Twitter, and definitely not what the average mid-career SWE with 5–10 years of experience should expect.

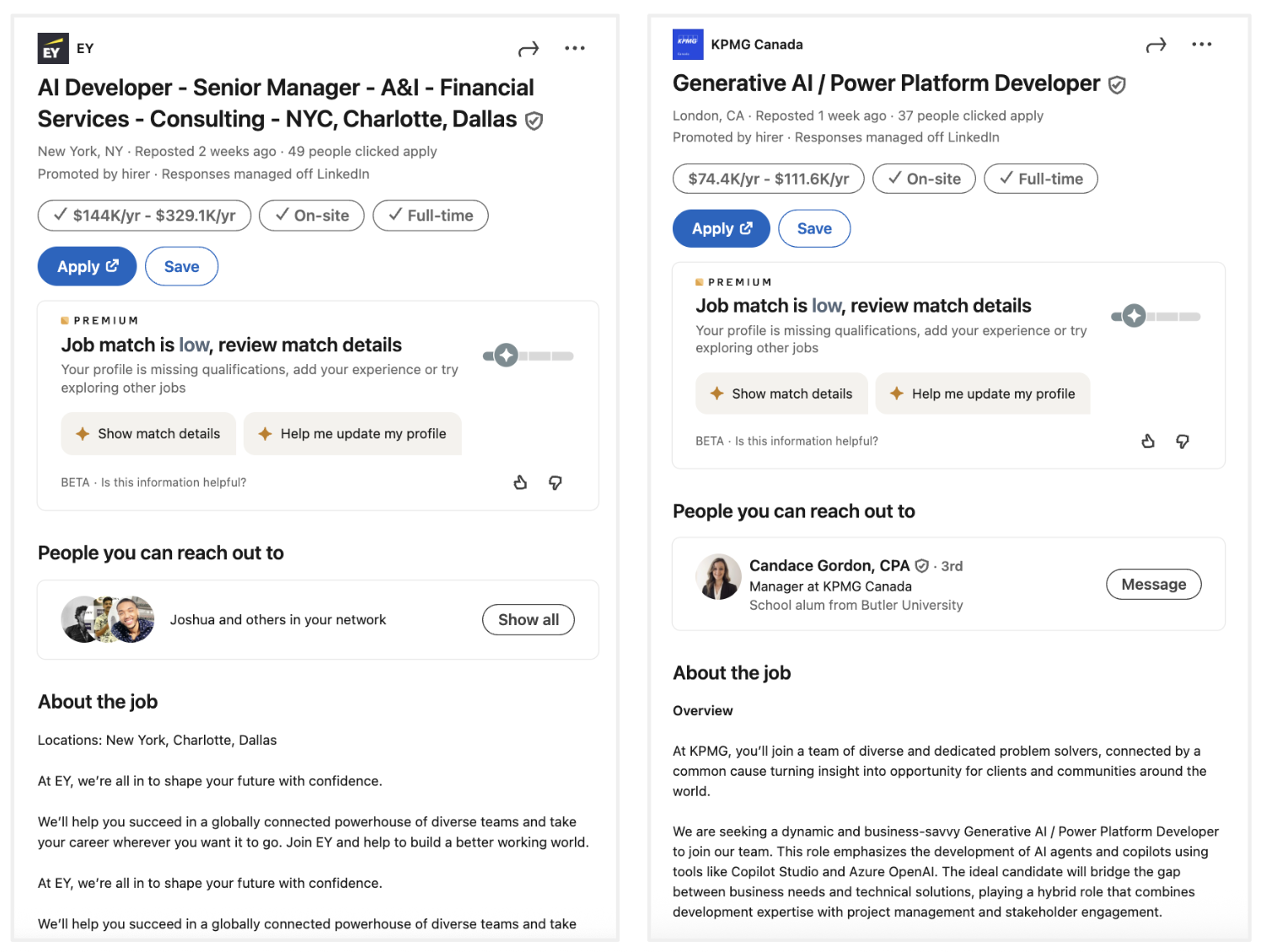

I pulled a few live AI Engineer postings (September 2025) to compare stated ranges side by side. Most sit right in that same pocket.

What stood out even more than the pay bands was how much the titles varied. Founding AI Engineer. Applied AI Engineer. Conversational AI Engineer. Senior AI Engineer – AI Product. The labels differ, but the underlying need is the same: someone who can drag LLMs out of the sandbox and wire them into production.

The branding shifts with the company stage, sector, or even a hiring manager’s imagination. But the overall insight is clear: demand is accelerating.

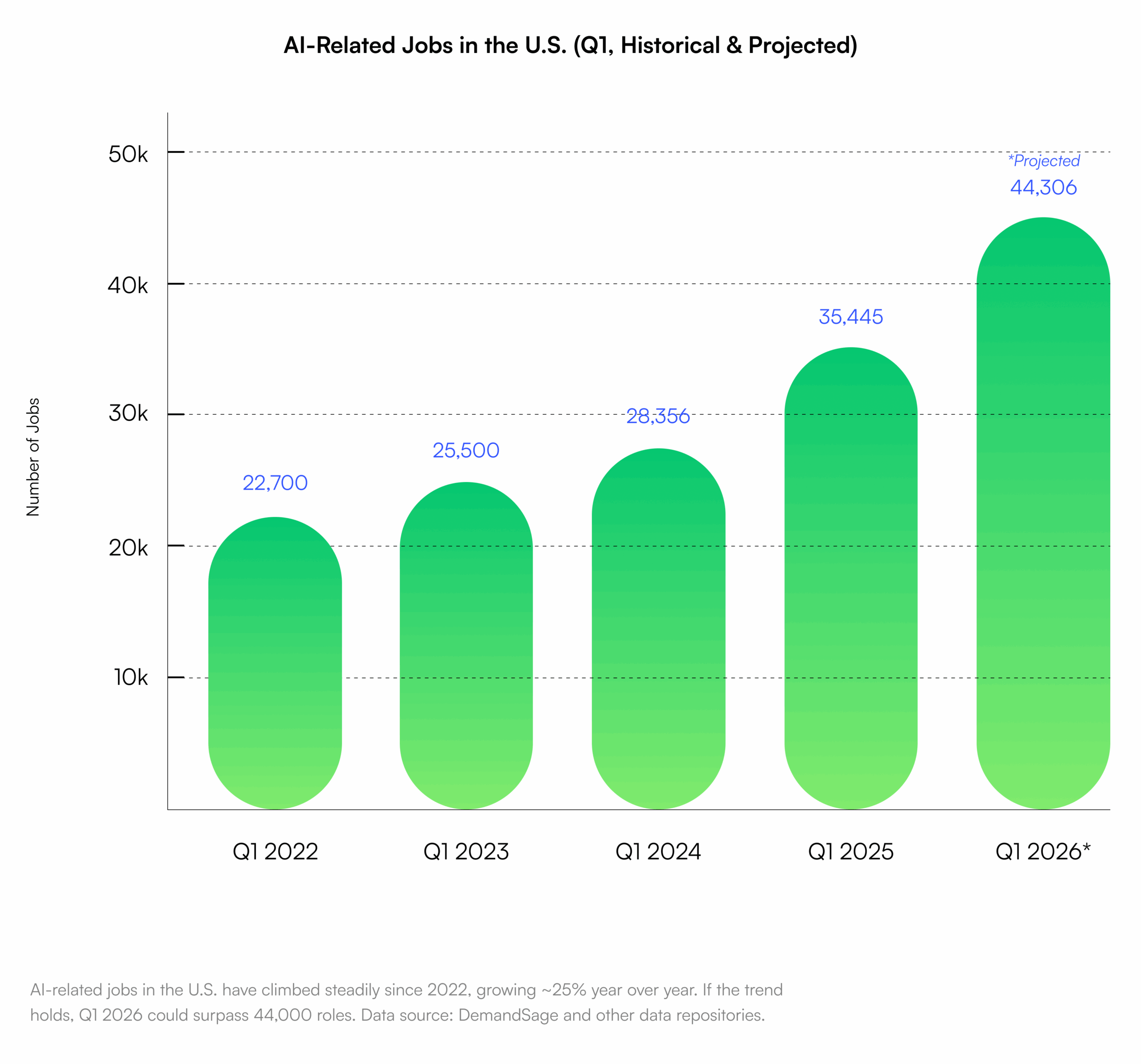

In Q1 2025, ~35,445 U.S. roles were explicitly flagged as AI-specific, and growth has held steady. Broader postings mentioning AI skills, across titles from SWE to data science, jumped from ~66,000 to ~139,000 between January and April.

The macro tells the story: general SWE hiring has been flat to down since 2022, while AI-titled roles climb.

A couple of recruiters told me their hardest reqs to fill right now include “AI Engineer” and “ML Infra,” even though half their execs can’t define the difference.

My takeaway: While there is some hype, and lots of variety in salary packages, the need is absolutely real.

“AI Engineer” has already become the north star of technical job boards. It’s the technical role of the year, and those who earn it are in a position to quietly wire the future of software into production.

Who’s hiring AI Engineers

What’s clear is that AI Engineers are becoming a staple headcount line across labs, enterprises, startups, consultancies, and the public sector.

Start with the giants.

OpenAI, Anthropic, Meta, Google, Microsoft — for the past 18 months, they’ve been scooping up AI Engineers like oxygen masks.

At these labs, the job isn’t tinkering; it’s scale. In ads and recommender systems alone, scale is its own universe. “You need to understand the whole stack — candidate generation, ranking, re-ranking — and how it ties into the ads ecosystem from ingestion through bidding,” said Vinija, the ex-Meta engineer now at Google. “Then there’s the constant drift — data drift, model drift, and recommendations going stale. It’s complex, practical work.”

These big AI labs don’t need someone to write prompts; they need people who can take bleeding-edge research and wire it into services that won’t implode when millions of users hammer them at once. Copilot, Gemini, Claude — all of them are powered by AI-savvy researchers and engineers who can make those models live in production, meet latency targets, and pass compliance audits.

Battling it out inside the AI arms race

What doesn’t show up in job postings is the quieter arms race. At the big labs, AI Engineers are often embedded in infra teams focused on cost optimization — shaving millions off GPU bills by building smarter caching, routing, and inference layers. It’s not glamorous, but it’s where fortunes are made or lost. A single bad architectural call at that scale can burn more cash than an entire startup ever raises.
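
Caching is the simplest of those levers to show. Here is a minimal sketch, assuming an exact-match, least-recently-used cache sitting in front of a hypothetical `call_model` function. Lab-scale inference layers go much further, so treat this as the idea rather than the architecture:

```python
import hashlib
from collections import OrderedDict
from typing import Callable


class ResponseCache:
    """Exact-match LRU cache in front of a (hypothetical) model call."""

    def __init__(self, call_model: Callable[[str], str], max_entries: int = 1024):
        self.call_model = call_model
        self.max_entries = max_entries
        self._store = OrderedDict()  # key -> cached response, oldest first
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Collapse whitespace so trivially different prompts share an entry.
        normalized = " ".join(prompt.split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._store:
            self._store.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = self.call_model(prompt)  # the expensive part
        self._store[key] = response
        if len(self._store) > self.max_entries:
            self._store.popitem(last=False)  # evict the least recently used entry
        return response
```

Exact-match caching is only safe where the same prompt should get the same answer, such as deterministic settings or lookup-style requests. The savings math is simple: hit rate times cost per call.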

Enterprises are different. Banks, insurers, healthcare giants, retailers: they’re all bringing AI Engineers in, too.

Scroll JPMorgan’s listings and you’ll see “AI Engineer” roles tucked inside risk and compliance. The reason is simple: regulators are circling. If a chatbot gives bad financial advice, that’s more than bad UX; it’s liability. If a healthcare model leaks patient data in a triage app, that’s not just a bug; it’s a potential HIPAA violation. These companies want AI Engineers to ship products, sure. But let’s be clear: they also need them to keep the company lawsuit-proof. Call it the “quiet compliance engineer” version of the role.

Startups are yet another story. In healthcare, fintech, and edtech, an “AI Engineer” is often a one-person army duct-taping APIs to get a demo out the door, then scrambling to turn it into something investors will actually fund.

I’ve seen Series A startups in Palo Alto advertise for AI Engineers when what they really needed was a Swiss-army developer: someone who can hack a retrieval system, wrangle a vector database, and sketch product design without blinking.

In reality, the “AI Engineer” title is less about specialization and more about survival — and about marketing to VCs as much as to candidates.

Then there are the consulting firms. The Big Four are spinning up “AI practices,” which in practice means embedding engineers into client teams to modernize legacy systems. Think of it as AI staffing with a white-collar sheen.

These engineers are translators in the purest sense: making outdated insurance platforms or government workflows look AI-native without actually rebuilding the core.

What’s less discussed: governments and defense contractors are on the hunt too. DARPA, Lockheed, and the alphabet soup of agencies are all posting for AI Engineers under titles like “applied AI systems” or “autonomous systems engineer.” These aren’t jobs you’ll see trending on Hacker News, but they represent a massive undercurrent of demand, tied to AI’s role in defense, intelligence, and national competitiveness.

Zooming back out, “AI Engineer” is less a single job than a catch-all flag. And the fact is that companies hoist it for different reasons:

- Labs want infra stability and cost optimization

- Enterprises want compliance and risk mitigation

- Startups want a Swiss Army knife who can ship

- Consultancies want billable talent in client slides

- Governments want technical leverage in defense

The common thread is leverage. Every company is trying to bend AI to its agenda, and the AI Engineer is the one asked to make that leap real.

Where research meets revenue

Prototypes don’t ship themselves (yet). Research models may wow in demos, but they usually crumble on latency, cost, privacy, and edge cases. The deeper I dug into this piece, the more AI Engineers looked like the “conversion layer” — the ones turning proofs-of-concept into products that can survive compliance reviews, hit SLAs, and avoid melting the GPU budget. In a market where every competitor can call an API, the moat isn’t access to a model, it’s the team that can make it reliable, observable, and cheap at scale. That’s the leverage executives actually pay for, and the reason this role is becoming indispensable.

Across labs, banks, startups, and consultancies, agendas differ (scale, compliance, speed, billables), but the stress lands in the same inbox. It’s the AI Engineer who gets paged when a model returns garbage in front of a customer or when an inference bill quietly explodes.

That’s a reality worth zooming into: what the role actually feels like, hour by hour.

Anatomy of an average Tuesday for an AI Engineer

I met an AI Engineer in Mountain View last week who laughed when I asked what his job actually looks like. “Depends on the day,” he said, then walked me through one.

8:30 a.m. — Slack is on fire. A product manager is panicking because the copilot feature is returning gibberish for enterprise customers. The engineer triages: is it the model, the prompt, or the retrieval layer choking on bad data? Usually, it’s all three.

11:00 a.m. — He’s knee-deep in API logs, tracing calls that ballooned a cloud bill by $50K overnight. “Half this job is playing detective,” he told me. “Not glamorous, but if you don’t track costs, you’re toast.”

2:00 p.m. — A design review. The PM and designer want to expand the chatbot’s scope. He pushes back. “Every time scope creeps, we inherit hidden debt. So, more evals, more edge cases, more fire drills.” You can feel the tug-of-war between ambition and reality.

6:00 p.m. — Finally, he’s back in Jupyter notebooks, fine-tuning a small model to make the larger system less brittle. “This is the only part that feels like pure engineering,” he admitted. “The rest really is glue work.”

On top of all that, there’s the invisible load: writing evals that no one outside the team cares about but that make or break a release, sitting through compliance reviews to prove the model isn’t leaking sensitive data, and keeping one eye on GPU dashboards to make sure today’s experiment doesn’t blow up next quarter’s budget.
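
The 11:00 a.m. cost hunt usually starts with attribution: which feature’s calls drove the spike? Here is a minimal sketch, assuming API logs that carry a feature tag and token counts per call (the field names and per-token prices are hypothetical):

```python
from collections import defaultdict

# Illustrative per-1K-token prices; real rates differ by provider and model.
PRICE_PER_1K = {"input": 0.003, "output": 0.015}


def cost_by_feature(records: list[dict]) -> list[tuple[str, float]]:
    """Sum estimated spend per feature and return the biggest spenders first.

    Each record is assumed to look like
    {"feature": str, "input_tokens": int, "output_tokens": int}.
    """
    totals = defaultdict(float)
    for r in records:
        cost = (r["input_tokens"] / 1000 * PRICE_PER_1K["input"]
                + r["output_tokens"] / 1000 * PRICE_PER_1K["output"])
        totals[r["feature"]] += cost
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)
```

Sorting by spend puts the likely culprit at the top of the list, which is often enough to know where to start digging.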

Every AI Engineer I’ve spoken to mentioned this same flavor of tension. Half the job is invention; the other half is keeping the business from moving faster than the tech can actually support.

“Some days I feel less like an engineer and more like an air-traffic controller,” one told me. “You’re juggling prompts, infra, compliance, and costs all at once. If you drop the ball on any of them, something crashes.”

And then there’s the emotional tax no one puts on job listings: the stress of shipping in a space where nobody has a playbook or precedent.

“Everyone wants AI features now, but no one wants to pay the cost of running them. So we’re asked to do magic with duct tape,” the same soft-spoken engineer told me, subtly shaking his head.

Then he looked up. “What makes it worth it isn’t the salary. It’s the rush of getting something impossible to work. Watching a model that was spitting nonsense suddenly deliver a clean, reliable output in front of a real user. That’s the high. That’s why I do this.”

It’s the same high Eledath described on a bigger canvas, looking back at his work building Asurion’s voice AI system from scratch. “I wrote the first line of code and now we have Fortune 500 clients,” he shared. That arc — from hackathon hack to production fire drills, from one line of code to helping massive brands — captures the velocity many AI Engineers now live inside.

I began to realize that what drives the developers behind these dashboards isn’t just comp or prestige; it’s the thrill of being first to forge the future and ship it into production. And that, I think, is why so many developers are clawing toward this role, even though the path into it is anything but straightforward.

How to get hired: portfolio over pedigree

In hiring, the ladder is forming. Universities are updating, bootcamps are iterating, but teams still rank shipped work above everything. The fastest path in is simple: build with AI and ship something people use — a working repo, a retrieval pipeline, a small Copilot in a live product.

Credentials help at the margins; proof of work carries the offer.

The pattern I kept hearing from developers who backed into this job is consistent: they built something, usually a side project that gained some traction. It might be a GitHub repo showing a retrieval system, a Copilot-like integration inside a company product, or something similar. It was almost never a certificate, a MOOC, or another bullet on LinkedIn. They shipped real code that people found useful.

One engineer hiring for an AI role on his team told me flat out: “Every applicant waves around a certificate. I look for people who’ve actually built something ugly that works and have used AI to do it. That’s the big thing for me: what have you built, and how comfortable are you using AI?”

So if you’re sitting outside this world and wondering how to get in:

- Start where you are. If you’re a SWE, wire an LLM into your current stack. For example, build a Slackbot using OpenAI’s API quickstart or Anthropic’s Claude cookbook. If you’re a data scientist, focus on deployment and infra: try standing up a small model using FastAPI or Ray Serve. The bridge matters more than the label.

- Learn the stack no syllabus covers. Prompt orchestration, retrieval, evals, cost optimization. These are what separate builders who can hack a demo from those who can keep a system alive at scale. Start small with LangChain’s docs or LlamaIndex tutorials, then explore evaluation frameworks like TruLens or Ragas.

- Build in public. Push projects to GitHub. Ship something to the App Store or Hugging Face Spaces. Even if it’s janky, you’ll learn faster than by grinding through another online course. Browse existing Hugging Face Spaces for inspiration, or join an AI hackathon like those on LabLab.ai to pressure-test your skills.

And of course, you need practical skills. But you don’t need to be schooled in AI history or theory. As Eledath put it: “Having a deep understanding of the theory behind LLMs, while helpful, is not that important. What matters more is being product-minded, strong in systems thinking, and comfortable as a software engineer.”

The fact is this: companies don’t need more paper-qualified AI dreamers. They need engineers who’ve already been in the mud. Certificates (AWS ML Specialty, TensorFlow, etc.) can help with screening, but they’re a tiebreaker at best. I’ll say it again: in this market, proof of work beats proof of study every time.

AI Engineers will go from unicorn to ubiquitous

So where does this all go?

In the short term, AI Engineers will command premium salaries and look like unicorns — half-engineer, half-magician. But the role won’t stay rare for long. Just like “webmaster” disappeared into every developer job, AI Engineering will seep into the DNA of software teams. Within five years, “AI literacy” will be table stakes and not a specialty.

Expect premiums to hold in the short term. In the medium term, the role will branch into specialties: retrieval, agents, evaluation, cost and infra, AI product. In the long term, AI fluency spreads across all of software, with AI Engineer as a defined path inside most org charts.

Some work will fold back into general SWE, as always happens, but the applied AI surface area is large enough that dedicated AI Engineering tracks will persist.

Zoom out and the pattern is this: every piece of software is becoming AI-infused, and someone has to wire that future into reality. For now, that person is called an AI Engineer.

AI Engineer is the job that turns intelligence into software people touch. In five years the title will feel ordinary. It will describe the first wave who learned to speak code and context and wired that skill into operating systems, chat windows, and dashboards. The novelty will fade, and the code they ship now will become the quiet foundation the next decade runs on.
