Hire & upskill the next-gen developer

July 2025 Release

Dive in and explore the latest features

Screen

Test Variants

You can now create a test with multiple variants. The appropriate variant is shown based on the preference the candidate selects at the start of the test.

Simplified Test Settings

Test settings are now easier to manage and are grouped into three sections: General, Test Content, and Test Administration. General covers the test name and basic information, Test Content lets you control question visibility and sections, and Test Administration handles availability, proctoring, and the candidate experience.

Invite Expiration Control

You can now define how long a test invite stays active directly at the test level. Set a specific end date or define a custom duration. This gives you flexibility to tailor expiration windows without being tied to a global default.
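To make the two expiration options concrete, here is a minimal Python sketch of how an expiry timestamp could be derived either from a fixed end date or from a duration measured from when the invite is sent. The function names and the rule that exactly one option is set are illustrative assumptions, not HackerRank's API or implementation.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def invite_expiry(
    sent_at: datetime,
    end_date: Optional[datetime] = None,
    duration: Optional[timedelta] = None,
) -> datetime:
    """Return the moment a test invite stops being active.

    Hypothetical rule: exactly one of end_date or duration is set.
    """
    if (end_date is None) == (duration is None):
        raise ValueError("set exactly one of end_date or duration")
    if end_date is not None:
        # Fixed end date: every invite for the test expires at the same moment.
        return end_date
    # Custom duration: each invite gets its own window starting when it was sent.
    return sent_at + duration


def is_invite_active(now: datetime, expiry: datetime) -> bool:
    """An invite is active strictly before its expiry time."""
    return now < expiry


sent = datetime(2025, 7, 1, 9, 0, tzinfo=timezone.utc)
expiry = invite_expiry(sent, duration=timedelta(days=7))
print(expiry.isoformat())  # 2025-07-08T09:00:00+00:00
print(is_invite_active(datetime(2025, 7, 5, tzinfo=timezone.utc), expiry))  # True
```

A fixed end date suits a hiring drive with a hard deadline, while a duration gives every candidate the same amount of time regardless of when they were invited.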

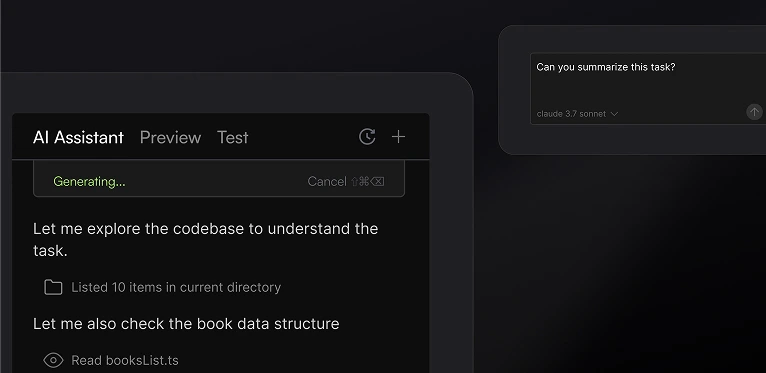

AI-Assisted IDE in Tests

Upgrade your tests with an AI-assisted IDE that offers candidates contextual help with syntax, templates, and platform guidance. The AI Assistant supports Coding, Frontend, Backend, Mobile, Full-Stack, and Code Repo question types.

Advanced Evaluation

As the developer's role changes and AI assistance becomes the norm, evaluating a developer has to go beyond code correctness and optimality. Advanced Evaluation assesses developers on code quality, AI usage, and other parameters, giving a holistic view of each developer.

Interview

Whiteboard Improvements

The Whiteboard is now faster and more intuitive to use. Text resizing, shape editing, and image pasting from tools like Google Docs now work more smoothly and reliably.

Scorecard PDFs for Workday and Greenhouse

You can now access the PDF version of the interview scorecard directly within a candidate's profile in Greenhouse and Workday.

Interview Transcription

Admins can now enable transcription for interviews. Powered by Zoom’s SDK, transcripts capture all audio interactions and are accessible via email, reports, or API. Admins also have full control over transcription settings to match their workflow and privacy preferences.

Scorecard Assist

Scorecard Assist fills in the interview scorecard using transcripts, code, test cases, and your rubric as inputs. This saves interviewers from writing a scorecard from scratch and should spare recruiting coordinators from chasing people to complete it.

AI-Assisted Interviews

You can now turn on AI assistance for candidates in the interview product. It includes inline assistance, a chat panel on the right, and an agent mode, so the IDE mirrors a modern developer setup.

Integrity

Enhanced Proctor Mode

Proctor Mode now brings AI-powered integrity monitoring to more question types, with session replay, webcam tracking, and automatic screenshot analysis. You can review a full session replay with flagged events such as tab switches or multiple-monitor use, making suspicious behavior easier to spot.

Smarter Integrity Signals

Interview integrity is now more contextual and focused. Instead of flagging isolated actions like tab switches or pastes, the system looks for patterns of behavior, grouping signals and classifying them as silent, grouped, or critical. You’ll only be alerted when multiple actions suggest a genuine concern, reducing unnecessary noise. A new real-time status widget gives you live visibility into candidate focus, screen sharing, and monitor setup.

Screen-to-Interview Identity Match

Ensure that the candidate who took the screening test is the same person attending the interview, without adding friction to the process. Candidate images are automatically captured during both stages and matched using facial recognition. If the similarity score falls below a predefined threshold, a real-time alert is triggered, giving you a chance to act without disrupting the session.
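The threshold check can be pictured as comparing two face embeddings. The sketch below assumes each captured image has already been converted into a numeric embedding vector by some face-recognition model (the release notes do not name one), uses cosine similarity as the score, and uses a hypothetical threshold of 0.8. HackerRank's actual model, scoring method, and threshold may differ.

```python
import math
from typing import Sequence

# Hypothetical threshold; the real value is not stated in the release notes.
SIMILARITY_THRESHOLD = 0.8


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def identity_mismatch(
    screen_embedding: Sequence[float],
    interview_embedding: Sequence[float],
    threshold: float = SIMILARITY_THRESHOLD,
) -> bool:
    """True when the similarity falls below the threshold, i.e. an alert should fire."""
    return cosine_similarity(screen_embedding, interview_embedding) < threshold


# Toy vectors standing in for real face embeddings.
print(identity_mismatch([0.2, 0.9, 0.4], [0.21, 0.88, 0.41]))  # False
print(identity_mismatch([1.0, 0.0], [0.0, 1.0]))  # True
```

Raising the threshold catches more impersonation attempts at the cost of more false alerts, which is why the comparison is against a tunable, predefined value rather than an exact match.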

Developer Experience

New candidate experience for Projects

The redesigned candidate experience now covers more question types: Frontend, Backend, Mobile, Full-Stack, GenAI, and Sentence Completion, in addition to the previously supported Coding, MCQ, and Database questions. Candidates can also reset a project and see integrated output traces in the IDE terminal, which improves visibility and reduces context switching.

Submission Check for Data Science Assessments

Candidates now get automatic checks when submitting their results in Data Science assessments. The system flags common issues like missing files, incorrect column names, or wrong data types. This reduces preventable errors and helps ensure candidates get credit for the work they’ve done.
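As an illustration of the kinds of checks described, here is a minimal Python sketch that validates a CSV submission against an expected schema, flagging a missing file, missing or unexpected columns, and values of the wrong type. The schema, the CSV format, and the function name are hypothetical; this is not HackerRank's implementation.

```python
import csv
import os
from typing import Callable, Dict, List

# Hypothetical schema: expected column name -> parser every value must satisfy.
EXPECTED_COLUMNS: Dict[str, Callable[[str], object]] = {
    "id": int,
    "prediction": float,
}


def check_submission(
    path: str, schema: Dict[str, Callable[[str], object]] = EXPECTED_COLUMNS
) -> List[str]:
    """Return human-readable problems with a CSV submission (empty list = OK)."""
    if not os.path.isfile(path):
        return [f"missing file: {path}"]

    problems: List[str] = []
    with open(path, newline="") as f:
        reader = csv.DictReader(f)
        header = reader.fieldnames or []

        # Column-name checks: report what is missing and what is unexpected.
        missing = [c for c in schema if c not in header]
        extra = [c for c in header if c not in schema]
        if missing:
            problems.append(f"missing columns: {missing}")
        if extra:
            problems.append(f"unexpected columns: {extra}")

        # Data-type checks: line 1 is the header, so data starts at line 2
        # (assuming no quoted fields that span multiple lines).
        for line_no, row in enumerate(reader, start=2):
            for col, parse in schema.items():
                if col not in row:
                    continue  # already reported as a missing column
                try:
                    parse(row[col])
                except (TypeError, ValueError):
                    # TypeError covers short rows, where DictReader fills in None.
                    problems.append(
                        f"line {line_no}: {col}={row[col]!r} is not {parse.__name__}"
                    )
    return problems
```

Calling `check_submission("submission.csv")` returns an empty list for a clean file, or messages such as `line 3: id='x' is not int` that a candidate can fix before the final submit.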

Upgraded JupyterLab IDE

The Data Science IDE now supports JupyterLab 4.4.1, giving candidates faster performance, custom layouts, and a cleaner UI for large notebooks.

GPU Support for High-Compute Questions

As questions grow more complex for compute-heavy use cases, such as TensorFlow in Machine Learning and Data Science roles, you can now request GPU-backed environments to ensure smooth execution and an optimal candidate experience. This lets you assess skills more accurately without being constrained by platform limitations.

DevOps Assessment Improvements

DevOps Library questions now load up to 50% faster, cutting wait times from over four minutes to around two. Terminal lag has also been reduced, so the environment feels more responsive right from the start.

Upgraded Language and Framework Support

The HackerRank platform now runs on the latest versions of today’s most-used languages and frameworks, including React, Node, Angular, PHP, Django, Spark, and more. This helps you assess developers in environments that reflect a modern development experience.

Engage

AI-Generated Banner Images

You can now create polished, professional banner images for your hackathons in seconds. Just describe what you need using the guided prompt, and the system generates custom visuals featuring your event name. You can regenerate up to five times to find the best fit.

Auto-Publish Ads to HackerRank Community

You can promote your events directly to HackerRank’s global developer audience. From the Promotions tab, add your event details and publish instantly. No manual setup or waiting required; your ad goes live as soon as you’re ready.

Registration Insights on Home Page

Your event overview page now provides a visual breakdown of views versus registrations. This helps you understand which marketing channels drive signups and where to focus your efforts.

Better Email Sequence Management

All event emails, from confirmations to reminders, are now organized in a single place. Under the new Outreach tab, you can review, edit, and manage your full email sequence, saving time and keeping communication consistent.

SkillUp

New Learn Tracks for GenAI Skills

You can now help your teams build foundational GenAI capabilities through hands-on, tutor-led learning paths. New tracks include Prompt Engineering, Retrieval-Augmented Generation (RAG), and Agent Building, each designed to reflect how developers work with AI in production.

Custom Certifications

You can now turn any custom test from HackerRank Screen into a SkillUp certification. Developers complete the assessment through a guided experience and earn a verifiable SkillUp certificate that’s trackable through your dashboard.

Developer Community

Platform Enhancements

Skills Platform

Flox Support

You can now create custom questions using the Flox package manager. This helps teams that have custom setups test candidates in consistent, reproducible environments.

iOS Assessments

Assess iOS development skills in an environment that mirrors how real apps are built. Candidates write Swift code, use frameworks like SwiftUI, and run their work in a built-in emulator.

Expanded Content Library

The content library now includes 99 new coding challenges and 41 new project tasks across high-demand roles. You’ll find new content for AI/ML, mobile, data science, QA, cloud, and more, giving you more ways to assess role-ready skills.

Content Quality Upgrades

Over 70% of our hands-on questions have been rewritten to improve clarity and accuracy, making the experience smoother for candidates and more consistent for reviewers. Every update has been validated against our quality standards, enabling faster reviews while delivering a great candidate experience.

Library Enhancements

Admin

Data & Insights